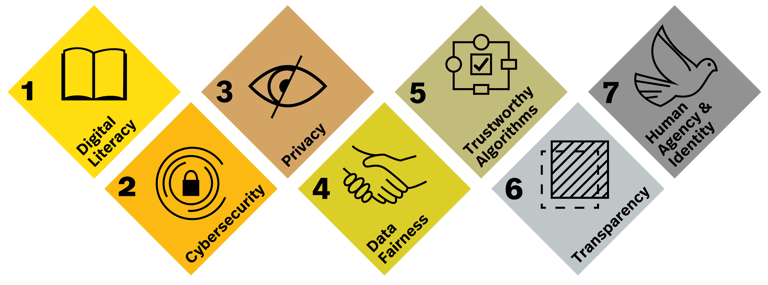

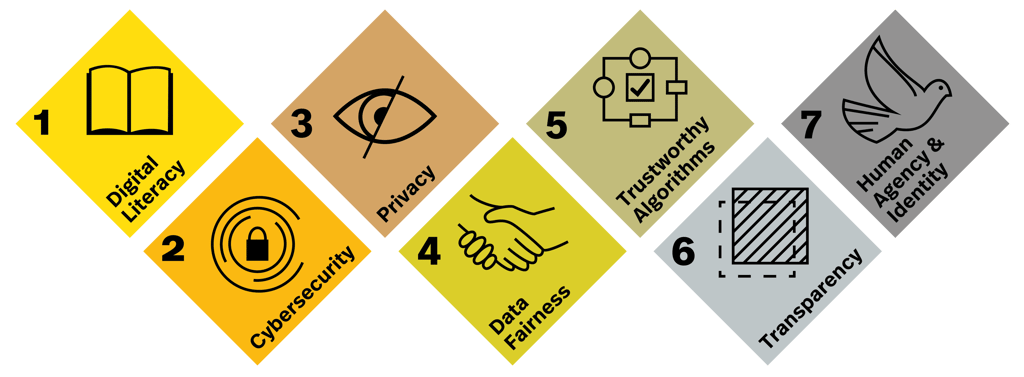

The seven Digital Responsibility Goals (DRGs) are guiding principles for a better digital future. They were developed in collaboration with digital experts from various fields. Designed to reduce complexity in our increasingly digitised lives, they make responsible behaviour in the digital world visible, comparable, and comprehensible. Similar to how the UN Sustainable Development Goals (SDGs) enable a common agenda for a more sustainable planet, the DRGs seek to promote digital technologies embedded in universal rights and values.

DRGs at a glance

Cybersecurity protects systems against compromise and manipulation by unauthorized actors and ensures the protection of users and their data - from data collection to data utilization. It is a basic prerequisite for the responsible operation of digital solutions.

Privacy is part of our human dignity and a prerequisite for digital self-determination. Protection of privacy allows users to act confidently in the digital world. Privacy by design and by default enable responsible data usage. Users need to be in control and providers must account for how they protect privacy.

Non-personal data must also be protected and handled according to its value. At the same time, suitable mechanisms must be defined to make data transferable and applicable between parties. This is the only way to ensure data fairness and balanced cooperation between various stakeholders in data ecosystems.

Data-processing must be comprehensible, fair and trustworthy. This is true for simple algorithms as well as for more complex systems, up to autonomously acting systems.

Proactive transparency for users and all other stakeholders is needed. This includes transparency of the principles that underlie digital products, services, and processes, and transparency of the digital solution and its components.

Especially in the digital space, we must protect our identity and preserve human responsibility. Preserving the multifaceted human identity must be a prerequisite for any digital development. The resulting digital products, services, and processes are human-centered, inclusive, ethically sensitive, and sustainable, maintaining human agency at all times.

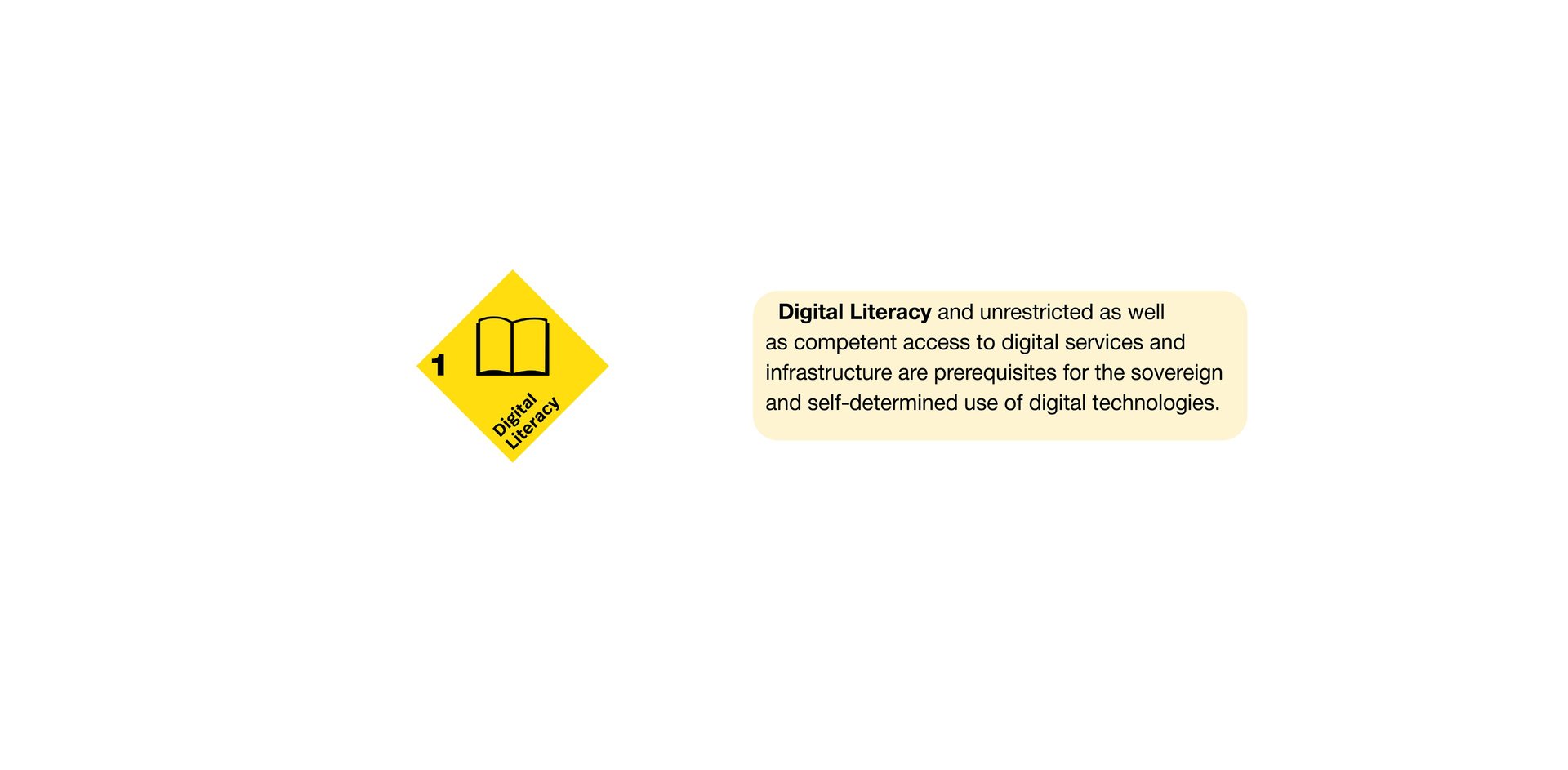

Digital Literacy and unrestricted as well as competent access to digital services and infrastructure are prerequisites for the sovereign and self-determined use of digital technologies.

Guiding criteria

There are five Guiding Criteria for each individual DRG. These provide instructions on how to act in order to build a more trustworthy digital ecosystem and conform to the respective DRG.

1.1 Information offered for digital products, services, and processes must be designed individually and in a way that is suitable for the target group.

1.2 Access to digital products, services, and processes must be reliable and barrier-free.

1.3 Acceptance of digital products, services, and processes must be proactively considered in design and operation. This includes measures on equity, diversity & inclusion.

1.4 Education on the opportunities and risks of the digital transformation is essential - everyone is entitled to education on digital matters.

1.5 Awareness of related topics such as sustainability, climate protection, and diversity/inclusion (e.g., along UN SDGs) should be raised where applicable.

2.1 Developers and providers of digital products, services, and processes assume responsibility for cybersecurity. Users also bear a part of the responsibility.

2.2 Developers and providers of digital technology are responsible for appropriate security measures and constantly develop them further. Digital technologies are designed to be resistant to compromise.

2.3 A holistic view and appropriate implementation of cybersecurity are considered along the lifecycle, value chain, and the entire service or solution.

2.4 Developers and providers of digital products, services, and processes must account for how they provide security for users and their data - while maintaining trade secrets.

2.5 Business, politics, authorities, civil society, and science must collaboratively shape the objectives and measures of cybersecurity. This requires open and transparent cooperation and disclosure.

3.1 Developers and providers of digital products, services, and processes must take responsibility for protecting the privacy of their users.

3.2 When dealing with personal data, basic principles of data protection are respected, in particular strict purpose limitation and data minimisation.

3.3 Privacy protection is considered throughout the entire lifecycle and should be considered a default setting.

3.4 Users have control over their personal data and its use - including the rights to access, rectify, erase, and port their data, to restrict processing, and to avoid automated decision-making.

3.5 Providers must account for how they protect users' privacy and personal data - while maintaining necessary trade secrets.

4.1 When collecting or reusing data, proactive care is taken to ensure the integrity of the data, considering whether any gaps, inaccuracies or bias might exist.

4.2 In digital ecosystems, the exchange of data between all parties must be clearly described and regulated. The goal must be fair participation in the benefits achieved through the exchange of data.

4.3 Developers and providers of digital technologies must clearly define and communicate the purpose for which they use and process data (including non-personal data).

4.4 When providing or creating datasets, the “FAIR” data principles are satisfied, especially in cases where re-use would benefit society as a whole.

4.5 Users providing or creating data must be equipped with mechanisms to control and withdraw their data - they shall have a say regarding data usage policies.

5.1 Algorithms, their application, and the datasets they are trained or used on are designed to provide a maximum of fairness and inclusion.

5.2 The individual and overall societal impact of algorithms is regularly reviewed and the review documented. Depending on the results, proportional corrective measures must be taken.

5.3 Outputs of algorithmic processing are comprehensible and explainable. Where possible, outputs should be reproducible.

5.4 AI systems must be robust and designed to withstand subtle attempts to manipulate data or algorithms.

5.5 AI systems must be designed and implemented in a way that independent control of their mode of action is possible.

6.1 Organizations establish transparency - about digital products, services, and processes as well as the organization, business models, data flows, and technology employed.

6.2 Transparency is implemented through interactive communication (for example, between providers and users), and mechanisms for interaction are actively offered.

6.3 The application of digital technology is made transparent wherever there is an interaction between people and the digital technology (for example, the use of chatbots).

6.4 In addition to transparency for users, transparency should also be provided for other stakeholders (e.g., businesses, science, governments) – while maintaining trade secrets.

6.5 Organizations must outline how they will make transparency verifiable and thus hold themselves accountable for their actions in the digital space.

7.1 The preservation of the multi-faceted human identity must be the basis for any digital development. Resulting digital technologies are user-centric, respect personal autonomy and dignity, and limit commoditization.

7.2 Sustainability and climate protection must be part of design choices of digital technologies and digital business models and implemented in practice.

7.3 Digital products, services, and processes promote responsible, non-manipulative communication. Where possible, communication takes place unfiltered.

7.4 Digital technology always remains under human conception and control - it can be reconfigured throughout its deployment.

7.5 Digital technology may only be applied to benefit individuals and humankind and to promote the wellbeing of humanity.

HOW do the DRGs work?

Our DRGs provide a comprehensive, three-tiered approach to creating a more trustworthy digital ecosystem:

Intuitive Visual Framework - At the highest level, each DRG represents a critical dimension of the digital world that requires improvement. We've distilled complex digital challenges into recognizable icons, making it easy for everyone—from consumers to CEOs—to identify the areas where action is needed most.

Clear Guidelines for Implementation - The middle tier features Guiding Criteria that provide businesses and developers with practical instructions. These criteria outline specific actions needed to build trust and align with each goal, empowering your organization to make responsible choices at every development stage.

Measurable Outcomes for Accountability - Our foundation layer includes precise metrics and indicators that allow for objective evaluation of digital applications. This quantifiable approach enables a detailed assessment of how well solutions meet our guiding criteria, the ranking and comparison of digital products through our Digital Responsibility Index, and a clear differentiation between responsible and questionable digital offerings.

Orientation for civil society, politics, industry

Orientation for consumers, users, market

Orientation for businesses, research, administration

Pre-empting negative effects of digitalisation

By underpinning the design and development of digital technology with guidance based on the DRGs (e.g. a "Digital Responsibility Playbook") from the beginning, negative qualities of those technologies can be pre-empted. This follows the assumption that once a technology is on the market and established, it becomes far harder to “repair” any potentially damaging qualities of that technology. Think of social media: this digital technology was invented in the early 2000s, and today we are still trying to mitigate its negative effects (e.g., misinformation, harm to mental health, privacy infringements).

Responsible technology as viable and sustainable business model

Demand for responsible and trustworthy digital technology is on the rise.[1] The DRGs and their guiding criteria are particularly suited to be integrated into business models for trusted technology. As responsible technology becomes more valued on the market and in society, companies will have an incentive to follow Identity Valley's playbook.

Win-win: More trust, higher adoption

Data shows that there is a correlation between trust in technology and adoption.[2] It can reasonably be assumed that digital technology that is verifiably trustworthy faces lower hurdles to adoption. Consequently, utilising the DRG framework can create a win-win situation: digital technology that is more aligned with human and societal needs and is therefore more widely adopted.

WHY do we need the DRGs?

By utilising the DRGs and their guiding criteria as ideological pillars in the conception, development and operation of digital technologies these negative effects can be mitigated and trustworthiness "by design" promoted.

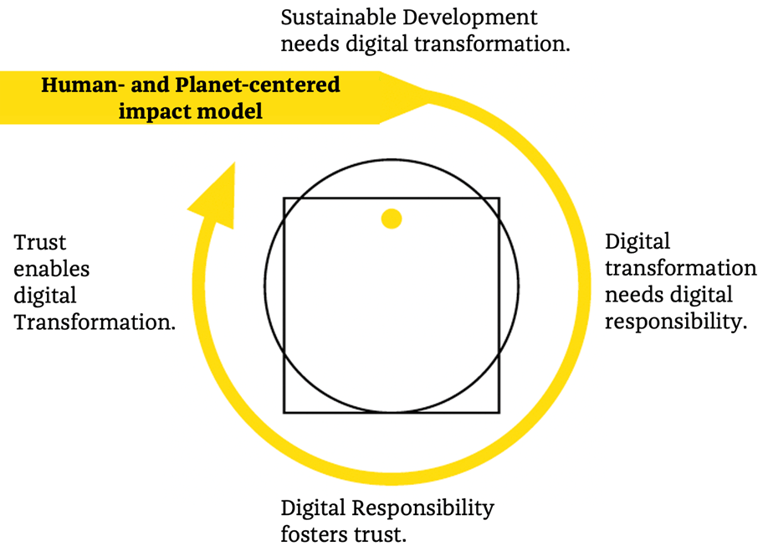

On a grand scale, the Digital Responsibility Goals are the driving force of a human- and planet-centred impact model: the current digital transformation is not sustainable. There is a lack of digital responsibility to build the necessary trust. The DRGs are shaping a responsible and trustworthy digital space - as the basis for sustainable development.

Undisputedly, digital technology is not inherently dangerous or damaging to society.

On the contrary, a lot of now ubiquitous digital technologies have positive impacts on, for example, the availability of information, effectiveness of communication or transnational (research) collaboration. Yet the negative impacts of many digital technologies on democracy, human rights, mental health of minors or social cohesion have become increasingly evident and consequential in past years.

Contact

For general questions:

info@identityvalley.org

For job-related questions:

jobs@identityvalley.org

In all our activities, we strive to use trustworthy digital technology in order to act in the most digitally responsible way. Occasionally, if there is no alternative, we still resort to less value-based digital solutions. Yet we are continuously working towards a world where trustworthy solutions are ever more widely available.

© Identity Valley 2025